TL;DR: High-performance, low-cost sharing of static data with read-only Persistent Disk attachments

Both Google Cloud Compute Engine and Kubernetes Engine allow you to attach a Persistent Disk (PD) in either read-write or read-only mode. For the Compute Engine VM boot disk, you’ll usually create it from an existing image or snapshot and attach it as read-write. Data disks, on the other hand, can be created empty or seeded with data from an existing image or snapshot, and are usually also attached in read-write mode.

But what if your instance or GKE container needs read-only access to (potentially terabytes of) reference data? This is when a read-only PD can come in quite handy!

First, though, you should seriously evaluate solutions designed for sharing: Google Cloud Storage (perhaps using GCSfuse), Filestore with NFS, or Google Cloud NetApp Volumes with NFS and/or SMB. All of these can present data read-only to some clients while allowing updates from others, and are generally easier to use and maintain.

If those solutions don’t work for you, perhaps because you need local filesystem semantics, you can get high-performance access without incremental cost using the read-only attachment mode like this:

A few things to be aware of:

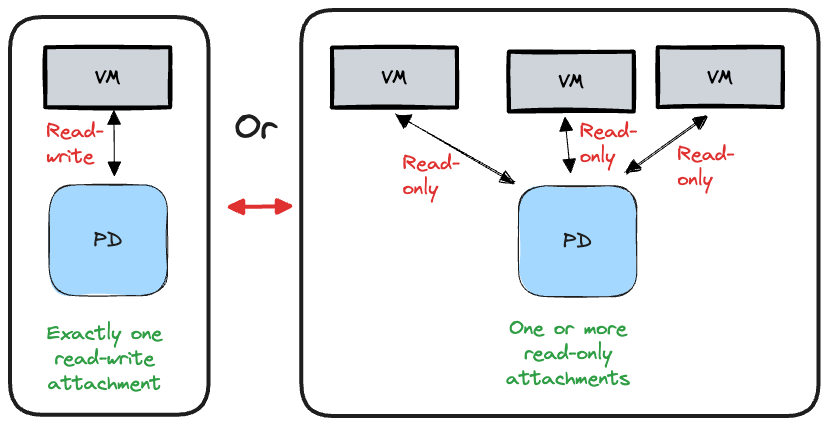

- Attachment modes are mutually exclusive: a disk is attached to up to one instance in read-write mode OR attached to one or more instances in read-only mode

- Standard HDD, Balanced SSD, and Performance SSD disk types support read-only attachments

- Google recommends no more than 10 read-only attachments per PD

- You will be charged for the capacity of the PD only; the read-only attachments are not charged

- Read-only ext3 and ext4 filesystems do not support journal playback to make the filesystem consistent. While the `noload` mount option can be used to skip loading the journal, there may be filesystem inconsistencies that can lead to any number of problems. Unmounting the filesystem before creating a clone or snapshot avoids these issues.

While you can prepare a disk attached read-write to a single instance, detach it, and then attach it read-only to multiple instances, it’s usually easier to create a new disk from a clone or (instant) snapshot of your original read-write disk.
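If you take the snapshot route rather than cloning, the flow is: snapshot the prepared disk, then create the shareable disk from that snapshot. This is a minimal sketch assuming the disk names and zone used in the Linux walkthrough below; the snapshot name `shared-snap` and the helper names are hypothetical. Set `DRY_RUN=1` to print the commands instead of running them.

```shell
# Hypothetical helper: print the command when DRY_RUN is set, otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Create a disk suitable for read-only attachment from a snapshot of a
# prepared (cleanly unmounted) source disk.
# Arguments: source disk, snapshot name, new disk name, zone.
ro_disk_from_snapshot() {
  local src=$1 snap=$2 dst=$3 zone=$4
  # Snapshot the source disk.
  run gcloud compute snapshots create "$snap" \
    --source-disk="$src" --source-disk-zone="$zone"
  # Create the disk that many instances will attach in ro mode.
  run gcloud compute disks create "$dst" \
    --source-snapshot="$snap" --zone="$zone"
}
```

For example: `ro_disk_from_snapshot shared shared-snap shared-ro europe-west4-b`. A snapshot can also seed disks in other zones, which a same-zone clone cannot.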

There are some nuances depending on the operating system and on whether you’re running VMs or GKE. Let’s walk through three scenarios:

- Linux VMs and read-only PD attachments

- Google Kubernetes Engine (GKE) and read-only PD attachments

- Windows Server VMs and read-only PD attachments

Linux VMs and read-only PD attachments

Linux allows usage of read-only disks and filesystems via mount options. The steps are quite straightforward.

Assuming you already have a Linux VM running, create a new disk to hold the data you want to share and attach it to your VM. I really like the `--device-name` flag of the `attach-disk` subcommand, as it gives us a predictable device file (`/dev/disk/by-id/google-<device-name>`) to use later:

```shell
gcloud compute disks create shared --type=pd-balanced --size=1024GB --zone=europe-west4-b
gcloud compute instances attach-disk base-vm --disk=shared --device-name=shared --zone=europe-west4-b
```

Log in to the VM and, as root, format the disk, copy your data to it, and then unmount it to ensure a clean filesystem is captured:

```shell
mkdir /shared
mkfs.ext3 /dev/disk/by-id/google-shared
mount /dev/disk/by-id/google-shared /shared
echo "shared data goes here" > /shared/data-to-share.txt
umount /shared
```
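Given the journal caveat above, it can be worth confirming the filesystem really is clean before you clone it. This is a minimal sketch that parses `dumpe2fs -h` output; it treats the filesystem as ready only when the superblock state is exactly `clean` and the `needs_recovery` feature flag (present while the journal still has entries to replay) is absent. The function name is hypothetical.

```shell
# Read `dumpe2fs -h` output on stdin; succeed only if the ext3/ext4
# filesystem is clean and its journal does not need replaying.
fs_ready_for_ro() {
  local header
  header=$(cat)
  # State must be exactly "clean" (not "not clean" or "clean with errors").
  printf '%s\n' "$header" | grep -Eq '^Filesystem state:[[:space:]]+clean$' || return 1
  # needs_recovery appears in the feature list while the journal needs replay.
  ! printf '%s\n' "$header" | grep -E '^Filesystem features:' | grep -qw needs_recovery
}
```

After `umount`, run as root: `dumpe2fs -h /dev/disk/by-id/google-shared 2>/dev/null | fs_ready_for_ro && echo "safe to clone"`.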

Next, clone it into a new disk that will be shared with multiple VMs using read-only (`ro`) attachments:

```shell
gcloud compute disks create shared-ro --source-disk=shared --zone=europe-west4-b
gcloud compute instances attach-disk worker1 --disk=shared-ro --device-name=shared-ro --zone=europe-west4-b --mode=ro
gcloud compute instances attach-disk worker2 --disk=shared-ro --device-name=shared-ro --zone=europe-west4-b --mode=ro
gcloud compute instances attach-disk worker3 --disk=shared-ro --device-name=shared-ro --zone=europe-west4-b --mode=ro
```
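With more than a handful of workers, the repeated `attach-disk` calls can be wrapped in a loop. This is a minimal sketch; the helper names are hypothetical, and the warning threshold reflects the 10 read-only attachments per PD discussed in this post. Set `DRY_RUN=1` to print the commands instead of running them.

```shell
# Hypothetical helper: print the command when DRY_RUN is set, otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Attach one disk read-only to every VM listed after the disk and zone.
attach_ro_all() {
  local disk=$1 zone=$2 vm
  shift 2
  # More than 10 read-only attachments per PD is not supported for balanced PD.
  if [ "$#" -gt 10 ]; then
    echo "warning: $# VMs requested; $disk may exceed the 10 read-only attachment limit" >&2
  fi
  for vm in "$@"; do
    run gcloud compute instances attach-disk "$vm" \
      --disk="$disk" --device-name="$disk" --zone="$zone" --mode=ro
  done
}
```

Usage: `attach_ro_all shared-ro europe-west4-b worker1 worker2 worker3`.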

Then, on each worker VM, mount it read-only:

```shell
mkdir /shared-ro
mount -o ro /dev/disk/by-id/google-shared-ro /shared-ro
```

That’s it, you now have read-only access to the dataset on all your worker VMs!
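The `mount` above does not survive a reboot. One way to make it persistent is an `/etc/fstab` entry. This is a minimal sketch assuming the ext3 filesystem and paths used above; the `ro,nofail` options and the `0 0` dump/pass fields are my choices, not something the post prescribes: `nofail` lets the VM boot even if the disk is detached, and pass `0` skips boot-time fsck, which could not repair a read-only device anyway. The function name is hypothetical, and it must run as root when writing to `/etc/fstab`.

```shell
# Append a read-only mount for a shared PD to an fstab file (default /etc/fstab),
# skipping the append if the exact entry is already present.
add_ro_fstab_entry() {
  local dev=$1 mnt=$2 fstype=$3 fstab=${4:-/etc/fstab}
  local entry="$dev $mnt $fstype ro,nofail 0 0"
  if ! grep -qxF -- "$entry" "$fstab" 2>/dev/null; then
    printf '%s\n' "$entry" >> "$fstab"
  fi
}
```

For example: `add_ro_fstab_entry /dev/disk/by-id/google-shared-ro /shared-ro ext3 && mount /shared-ro`.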

If you want to be able to write to the disk, such as to run a pre-populated database for many training or test VMs, perhaps it could be combined with a writable layer using a union filesystem. This investigation is left as an exercise for the reader 😉.
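For anyone taking up that exercise, one starting point on Linux is overlayfs: the read-only PD is the lower layer, and a directory on a writable disk (for example the boot disk) holds the upper and work directories, so all writes land there. This is a rough sketch with hypothetical paths and helper names, meant as a starting point rather than a tested recipe; it must run as root. Set `DRY_RUN=1` to print the commands instead.

```shell
# Hypothetical helper: print the command when DRY_RUN is set, otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Present a writable view of a read-only directory using overlayfs.
# Writes go to $scratch/upper; the lower (read-only) directory is never modified.
overlay_mount() {
  local lower=$1 scratch=$2 merged=$3
  # upperdir and workdir must be on the same writable filesystem.
  run mkdir -p "$scratch/upper" "$scratch/work" "$merged"
  run mount -t overlay overlay \
    -o "lowerdir=$lower,upperdir=$scratch/upper,workdir=$scratch/work" "$merged"
}
```

For example: `overlay_mount /shared-ro /var/lib/shared-overlay /shared-rw`, then point the database at `/shared-rw`.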

Google Kubernetes Engine (GKE) and read-only PD attachments

Google Kubernetes Engine (GKE) includes the Compute Engine persistent disk CSI Driver to provision and manage storage. Storage can be requested using a PersistentVolumeClaim with an `accessModes` value of `ReadWriteOnce` for a writable volume that can be mounted by a single node, or `ReadOnlyMany` for a read-only volume that can be mounted by multiple nodes. As in the other scenarios, it’s easiest to create a new disk to hold your source data as writable, write data to it, and then clone it to another disk for read-only attachments. These steps can all be done directly through GKE. Let’s get started!

Create a GKE cluster and then install and configure kubectl access.
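A minimal sketch of that setup; the cluster name, zone, and node count are illustrative assumptions, and `gcloud components install` only works for gcloud installations that manage their own components (package-manager installs use the OS packages instead). With 3 nodes the read-only volume is attached to at most 3 nodes, so you won't hit the 10-node limit shown later unless you add more. Set `DRY_RUN=1` to print the commands instead.

```shell
# Hypothetical helper: print the command when DRY_RUN is set, otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Create a small zonal GKE cluster and point kubectl at it.
create_blog_cluster() {
  local name=${1:-blog-cluster} zone=${2:-europe-west4-b}
  # kubectl plus the auth plugin that kubectl uses to authenticate to GKE.
  run gcloud components install kubectl gke-gcloud-auth-plugin
  run gcloud container clusters create "$name" --zone="$zone" --num-nodes=3
  # Adds a kubeconfig context for the new cluster.
  run gcloud container clusters get-credentials "$name" --zone="$zone"
}
```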

If you already have a disk with the data you want to share on it, check these instructions on how to bring it into Kubernetes. If not, prepare a writer container with some storage attached. Do this by creating a file named rw.yaml containing:

```yaml
kind: Namespace
apiVersion: v1
metadata:
  name: blog-ns
  labels:
    name: blog-ns
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  namespace: blog-ns
  name: shared
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: standard-rwo
  resources:
    requests:
      storage: 1Ti
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: writer
  namespace: blog-ns
  labels:
    app: writer
spec:
  replicas: 1
  selector:
    matchLabels:
      app: writer
  template:
    metadata:
      labels:
        app: writer
    spec:
      containers:
        - name: writer
          image: nginx:latest
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: mypvc
          ports:
            - containerPort: 80
      volumes:
        - name: mypvc
          persistentVolumeClaim:
            claimName: shared
            readOnly: false
```

This YAML contains three resource definitions:

- Namespace: It’s a best practice to put resources in their own namespace, so let’s create one called `blog-ns`
- PersistentVolumeClaim: This is a request for 1 TiB of balanced PD. An `accessModes` value of `ReadWriteOnce` makes it writable and able to be mounted by a single node
- Deployment: This is a spec for a simple container running an application that mounts the PersistentVolumeClaim

Apply the configuration using:

```shell
kubectl apply -f rw.yaml
```

Use `kubectl get pods --namespace=blog-ns -o wide` to check the details of the running pod. Normally you would have a real application that saves the data you want to share to the volume, but for demo purposes we’ll just save a text file to it and verify we can read it via the web server. Use the following commands, replacing `writer-647fc7b778-cjgtx` with the name of your pod:

```shell
kubectl exec writer-647fc7b778-cjgtx --namespace=blog-ns -- sh -c 'echo "shared data goes here" > /usr/share/nginx/html/data-to-share.txt'
kubectl exec writer-647fc7b778-cjgtx --namespace=blog-ns -- sh -c 'curl -s http://localhost/data-to-share.txt'
shared data goes here
```
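If you’d rather not copy the generated pod name, the same steps can target the Deployment: `kubectl exec` accepts `deploy/NAME` and picks one of its pods, and `kubectl wait` can block until the Deployment is Available. A minimal sketch; the helper names are hypothetical. Set `DRY_RUN=1` to print the commands instead.

```shell
# Hypothetical helper: print the command when DRY_RUN is set, otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Wait for the writer Deployment, write the demo file, then read it back.
write_and_check() {
  local ns=blog-ns
  run kubectl wait --for=condition=Available deployment/writer --namespace="$ns" --timeout=300s
  run kubectl exec deploy/writer --namespace="$ns" -- \
    sh -c 'echo "shared data goes here" > /usr/share/nginx/html/data-to-share.txt'
  run kubectl exec deploy/writer --namespace="$ns" -- \
    sh -c 'curl -s http://localhost/data-to-share.txt'
}
```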

Delete the writer deployment to ensure the disk and filesystem are unmounted and in a clean state:

```shell
kubectl delete deployment writer --namespace=blog-ns
```
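Deleting the Deployment triggers the unmount, but the detach from the node happens asynchronously. A sketch that waits until no VolumeAttachment object references the PersistentVolume bound to `shared` before you create the clone; the function name and the `POLL_SECONDS` interval are assumptions.

```shell
# Block until the PV bound to a PVC no longer appears in any VolumeAttachment.
wait_for_detach() {
  local pvc=$1 ns=$2 pv
  pv=$(kubectl get pvc "$pvc" --namespace="$ns" -o jsonpath='{.spec.volumeName}') || return 1
  [ -n "$pv" ] || return 1
  # Each VolumeAttachment names its PV in .spec.source.persistentVolumeName.
  while kubectl get volumeattachments \
      -o jsonpath='{range .items[*]}{.spec.source.persistentVolumeName}{"\n"}{end}' \
      | grep -qx -- "$pv"; do
    echo "waiting for $pv to detach..." >&2
    sleep "${POLL_SECONDS:-5}"
  done
  echo "$pv is detached"
}
```

Usage: `wait_for_detach shared blog-ns`.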

Next, prepare some reader containers with a new cloned volume attached as ReadOnlyMany. Do this by creating a file named ro.yaml containing:

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  namespace: blog-ns
  name: shared-ro
spec:
  dataSource:
    name: shared
    kind: PersistentVolumeClaim
  accessModes:
    - ReadOnlyMany
  storageClassName: standard-rwo
  resources:
    requests:
      storage: 1Ti
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: readers
  namespace: blog-ns
  labels:
    app: nginx
spec:
  replicas: 11
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: mypvc
          ports:
            - containerPort: 80
      volumes:
        - name: mypvc
          persistentVolumeClaim:
            claimName: shared-ro
            readOnly: true
```

This YAML contains two resource definitions:

- PersistentVolumeClaim: This is a request for 1 TiB of balanced PD. The `dataSource` parameter references the PersistentVolumeClaim we created in the `rw.yaml` spec, and that disk will be cloned to create this new disk. An `accessModes` value of `ReadOnlyMany` makes it readable and able to be mounted by multiple nodes
- Deployment: This is a spec for a simple container running an application that mounts the PersistentVolumeClaim read-only

Apply the configuration using:

```shell
kubectl apply -f ro.yaml
```

Use `kubectl get pods --namespace=blog-ns -o wide` to check the details of the running pods. All of them access a read-only attachment of the cloned volume. Let’s read the data we wrote earlier using the writer pod. Use the following, replacing `readers-5dcb555946-4w988` with the name of one of your pods:

```shell
kubectl exec readers-5dcb555946-4w988 --namespace=blog-ns -- sh -c 'curl -s http://localhost/data-to-share.txt'
shared data goes here
```
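To check every reader at once, you can loop over the running pods, read the file, and confirm that a write is rejected. A minimal sketch; the function name and the `probe` file name are hypothetical, and only pods in the `Running` phase are included so that pods still stuck in `ContainerCreating` don’t produce misleading results.

```shell
# For every running reader pod: print the shared file, then try a write.
check_readers() {
  local ns=blog-ns pod
  for pod in $(kubectl get pods --namespace="$ns" --selector=app=nginx \
      --field-selector=status.phase=Running -o name); do
    printf '%s: ' "$pod"
    kubectl exec "$pod" --namespace="$ns" -- cat /usr/share/nginx/html/data-to-share.txt
    # With a read-only mount, touch should fail with "Read-only file system".
    if kubectl exec "$pod" --namespace="$ns" -- touch /usr/share/nginx/html/probe 2>/dev/null; then
      echo "  WARNING: volume is writable"
    else
      echo "  write rejected as expected"
    fi
  done
}
```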

Each node gets the full performance of the PD, allowing each of them to go fast! Keep in mind that balanced PD can be read-only attached to at most 10 nodes. If GKE tries to attach the volume to an 11th node you will see pods stuck in the ContainerCreating state with an error like this:

```text
AttachVolume.Attach failed for volume "pvc-c86f1b46-88ed-4e9f-b3f4-b9eb8380a2c0" : rpc error: code = Internal desc = Failed to Attach: failed cloud service attach disk call:
googleapi: Error 400: Invalid resource usage: 'Attachment limit exceeded. Read-only persistent disks can be attached to at most 10 instances.'., invalidResourceUsage
```
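To see how close the readers are to that limit, you can count the distinct nodes their pods were scheduled onto, since each node needs its own attachment. A minimal sketch; the function name is hypothetical. Pods not yet scheduled print an empty node name, which the final `grep -c .` skips.

```shell
# Count distinct nodes hosting reader pods (one read-only attachment per node).
count_reader_nodes() {
  kubectl get pods --namespace=blog-ns --selector=app=nginx \
    -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}' | sort -u | grep -c .
}
```

A result above 10 means at least one node cannot attach the volume.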

If you run at a larger scale, you can use multiple cloned disks, each of which can be attached to up to 10 nodes.
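Generating those extra clones is mostly boilerplate. A sketch that prints N PersistentVolumeClaims named `shared-ro-1` through `shared-ro-N`, each cloned from `shared` exactly like `shared-ro` above; the naming scheme and function name are hypothetical. You would still need to keep each reader Deployment that uses a given clone on at most 10 nodes (for example with a dedicated node pool and a `nodeSelector`).

```shell
# Print COUNT ReadOnlyMany PVC manifests, each cloned from the "shared" PVC.
gen_ro_clones() {
  local count=$1 i=1
  while [ "$i" -le "$count" ]; do
    cat <<EOF
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  namespace: blog-ns
  name: shared-ro-$i
spec:
  dataSource:
    name: shared
    kind: PersistentVolumeClaim
  accessModes:
    - ReadOnlyMany
  storageClassName: standard-rwo
  resources:
    requests:
      storage: 1Ti
EOF
    i=$((i + 1))
  done
}
```

Usage: `gen_ro_clones 3 | kubectl apply -f -`.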

You can clean up by deleting the namespace using `kubectl delete namespace blog-ns`.

Windows Server VMs and read-only PD attachments

Windows Server supports read-only PD attachments, but only if the Read-only attribute of the disk or volume is set to True in its on-disk metadata. This means that, before you attach the disk in `ro` mode, you need to set the disk or volume attribute to Read-only from within Windows Server.

Let’s assume you have a Windows VM running with a PD named shared attached in `rw` mode. Open a command prompt as Administrator and use diskpart to set the data disk to read-only:

```text
C:\>diskpart

Microsoft DiskPart version 10.0.20348.1

Copyright (C) Microsoft Corporation.
On computer: WIN-MAIN

DISKPART> list disk

  Disk ###  Status         Size     Free     Dyn  Gpt
  --------  -------------  -------  -------  ---  ---
  Disk 0    Online           50 GB      0 B        *
  Disk 1    Online         1024 GB  1024 KB        *

DISKPART> select disk 1

Disk 1 is now the selected disk.

DISKPART> attributes disk set readonly

Disk attributes set successfully.
```

Now you can clone the disk and attach it to other hosts with a ro mode attachment:

```shell
gcloud compute disks create shared-ro --source-disk=shared --zone=europe-west4-b
gcloud compute instances attach-disk win-student1 --disk=shared-ro --zone=europe-west4-b --mode=ro
gcloud compute instances attach-disk win-student2 --disk=shared-ro --zone=europe-west4-b --mode=ro
gcloud compute instances attach-disk win-student3 --disk=shared-ro --zone=europe-west4-b --mode=ro
```
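To confirm which VMs have the clone attached, and how many read-only attachments you are using, you can read the disk’s `users` field. A minimal sketch; the function name is hypothetical, and it assumes gcloud’s `value()` format joins list entries with `;` (its default) and that each entry is an instance URL ending in the instance name.

```shell
# List the instances a disk is attached to, one instance name per line.
disk_users() {
  local disk=$1 zone=$2
  gcloud compute disks describe "$disk" --zone="$zone" --format='value(users)' \
    | tr ';' '\n' | sed 's#.*/##' | grep .
}
```

Usage: `disk_users shared-ro europe-west4-b`, or pipe it to `wc -l` for a count.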

If all went well, you should see your drive and large dataset mounted read-only on all three VMs!

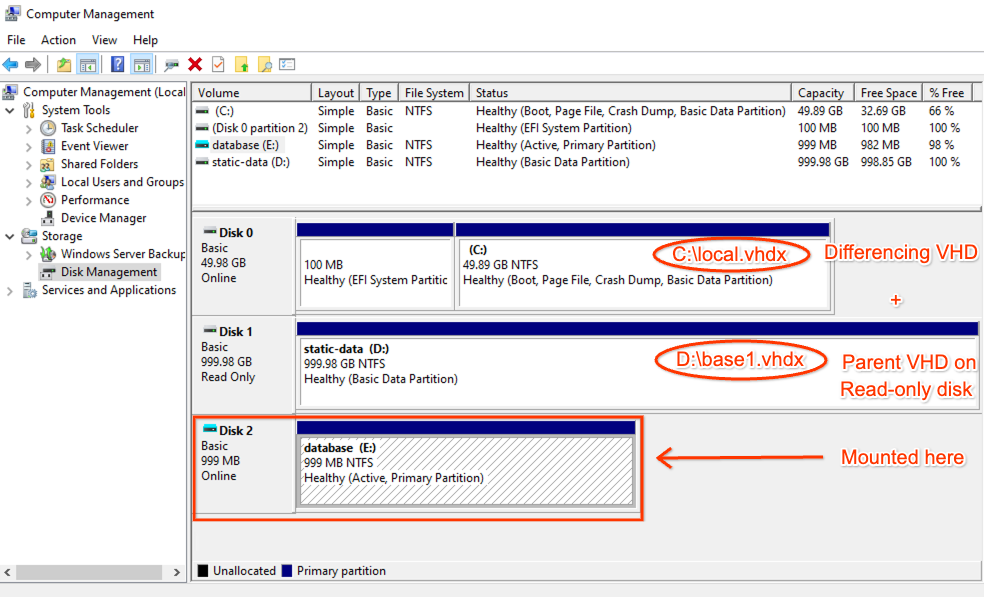

Bonus: Write access on Windows Server using a differencing layer on top of the read-only PD

A customer asked me whether they could use a read-only disk as the base for a writable disk, something like a VMware linked clone or a QEMU qcow2 image with a backing file, for a training environment. They had several large databases and wanted to avoid paying for many full copies of them during the training sessions. While I don’t think such a feature exists in Windows Disk Management, we can use Microsoft’s Virtual Hard Disk (VHD) format to do it.

In this scenario you create a fixed VHD on your PD, load the data you want to share into the VHD, and then detach the VHD. Next, set the Windows disk to Read-only using diskpart, clone the PD to another PD, and attach the clone as `ro` to the student VMs. On each student VM, create a new differencing VHD on the boot drive whose parent is the VHD on the read-only drive. Voilà: from a user perspective you now have a writable database disk, but you avoided the cost of many large dedicated PDs!

From a performance perspective, I didn’t see any significant penalty from adding the VHD layer; reads were bounded by the limits of the read-only disk and writes by those of the boot disk. Obviously this technique is not designed for performance or long-running use cases, but for some transient needs it could be useful.

Here are the steps. First, create your parent VHD on the read-write PD using these diskpart commands (`maximum` is in megabytes, so this example creates a 1,000 MB VHD; size it to fit your data):

```text
create vdisk file="D:\base1.vhdx" type=fixed maximum=1000
select vdisk file="D:\base1.vhdx"
attach vdisk
create partition primary
active
assign letter=S
format quick fs=ntfs label=database
```

Now load the data you want to share onto the S: drive and detach the VHD (in diskpart, `select vdisk file="D:\base1.vhdx"` followed by `detach vdisk`). Then proceed with the steps explained earlier: set the disk to Read-only from within Windows Server, clone the PD to a new one, and attach the clone read-only to the student VMs.

Then, on the student VMs, create a new differencing VHD that is based on the parent VHD using these diskpart commands:

```text
create vdisk file="C:\local.vhdx" parent="D:\base1.vhdx"
select vdisk file="C:\local.vhdx"
attach vdisk
```

You should see a new drive letter appear, and if you check its contents you’ll find a writable drive with your database files in it.

For 10 students who each need their own writable 1 TiB database, this technique just avoided 9 TiB of disk costs!
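The arithmetic generalizes to larger classes. A sketch, assuming one full-size read-only clone per 10 students (the attachment limit discussed earlier) and ignoring both the original read-write PD and the growth of each differencing VHD on the boot disks; the function name is hypothetical.

```shell
# Disk capacity (TiB) avoided versus giving every student a full copy.
# Arguments: number of students, database size in TiB, students per clone (default 10).
savings_tib() {
  local students=$1 size_tib=$2 per_clone=${3:-10}
  # Round up: each read-only clone can serve at most $per_clone students.
  local clones=$(( (students + per_clone - 1) / per_clone ))
  echo $(( (students - clones) * size_tib ))
}
```

`savings_tib 10 1` reproduces the 9 TiB above; for 25 students, three clones are needed and `savings_tib 25 1` gives 22.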

As always, comments are welcome!