Building highly available and performant applications often requires shared storage accessible by multiple VMs. Traditionally shared storage has been made available to VMs in a single zone and was not fault tolerant to zone failure. Google Cloud’s Hyperdisk Balanced HA multi-writer technology solves this problem by combining cross-zone replication with multi-writer access from VMs across two zones.

This solution opens up new possibilities for applications requiring both resilience and performance including:

- Host based clusters: High availability designs that typically use Linux Pacemaker or Windows Server Failover Clusters to assign and move resources amongst nodes in the cluster. With Hyperdisk Balanced HA multi-writer those nodes can now be in two different zones and use Persistent Reservations to control access to shared-disk resources.

- Shared-disk filesystems: Shared storage designs supported by filesystems such as GFS2, OCFS2 and VMFS that manage access to shared-disk resources using clustering and/or locking capabilities for data coherence across nodes. Often combined with host based clusters.

If your shared storage needs can be met using NFS or SMB protocols I highly recommend using Filestore (single zone or HA across three zones) or NetApp Volumes (single zone or HA across two zones). Both of these provide check-box simplicity for shared storage requirements in a zone, or across zones. But, the blog today is about Hyperdisk Multi-writer so we’ll take the harder path and build something ourselves!

Building a GFS2 Shared Filesystem with Ubuntu

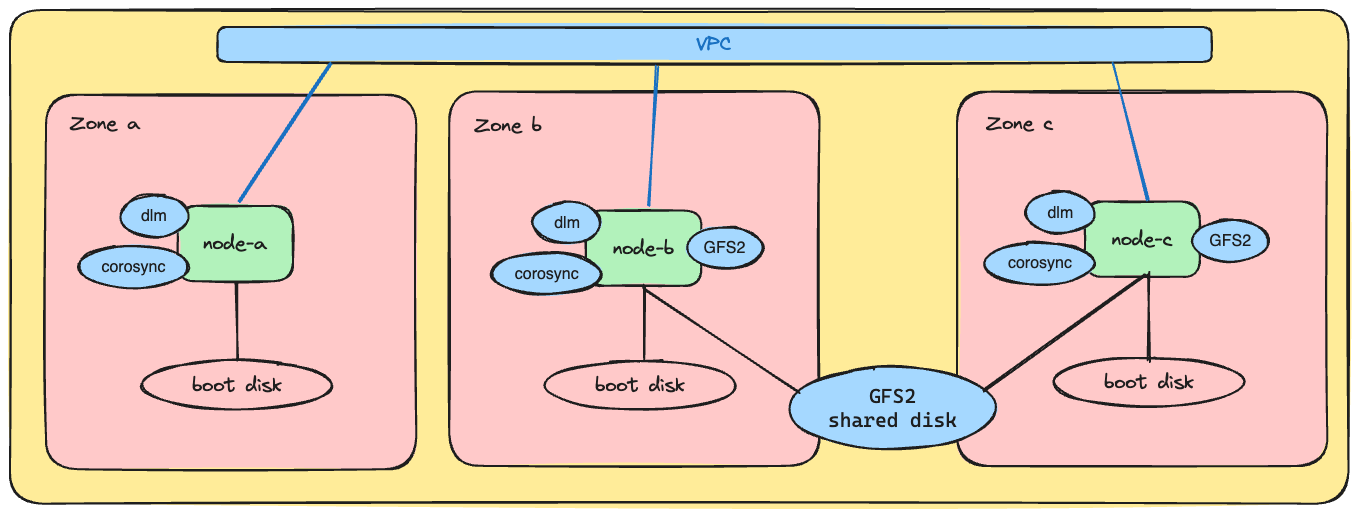

Solutions that use shared disks tend to be complex, and to focus on the core concepts I decided to build a basic GFS2 shared-disk filesystem across two VMs in different zones. With this solution an application running on each of those VMs can read and write data from the same filesystem concurrently, and a VM (or zone) can fail and the data is still available in the other. Filesystem coherency is managed by a cluster-wide locking scheme that uses DLM and Corosync to exchange locking information. I added a VM in a third zone to make locking services resilient.

The infrastructure can be visualized as:
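The reason for the third VM comes down to quorum arithmetic. The sketch below assumes one vote per node and a simple majority rule, which is how I understand the default votequorum setup to behave; the function names `quorum_votes` and `survives_failure` are hypothetical and exist only for illustration.

```shell
#!/usr/bin/env bash
# Illustrative only: majority quorum with one vote per node (an assumption).
quorum_votes() {
  local nodes=$1
  echo $(( nodes / 2 + 1 ))
}

# Succeeds if the cluster still has quorum after losing `failed` nodes.
survives_failure() {
  local nodes=$1 failed=$2
  (( nodes - failed >= $(quorum_votes "$nodes") ))
}

for n in 2 3; do
  if survives_failure "$n" 1; then
    echo "$n nodes: quorum=$(quorum_votes "$n"), survives one failure"
  else
    echo "$n nodes: quorum=$(quorum_votes "$n"), loses quorum after one failure"
  fi
done
```

With two nodes a single failure leaves one vote out of a required two, so locking stops. With three nodes the two survivors still form a majority.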

Step by Step Instructions

| WARNING: These instructions are useful to prove the concept and NOT suitable for a production deployment. A production deployment would secure Corosync, likely add Pacemaker cluster software for coordinated resource management and fencing to increase availability and fault tolerance, use LVM, and include tuning of the DLM and GFS2 settings for the desired use case. |

Prepare the environment and create cloud resources

Assumptions:

- You are a project owner

- Avoid setting public IP addresses on resources. Instead:

  - Configure a Public NAT in the region so VMs can pull packages from the Internet

  - Enable IAP for TCP forwarding and create a firewall rule allowing IAP connections to your VPC
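If you have not set up NAT and IAP yet, something along these lines may help. This is a sketch under assumptions: the resource names (`nat-router`, `nat-config`, `allow-iap-ssh`), the `default` network and the placeholder project are mine, not part of the original setup. It prints the commands by default and only runs them when `APPLY=1` is set.

```shell
#!/usr/bin/env bash
# Sketch only. Resource names and the "default" network are assumptions.
PROJECT=${PROJECT:-my-project}     # placeholder, replace with your project
REGION=${REGION:-europe-west4}

# Print each command by default; set APPLY=1 to actually run it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

# Cloud Router plus Cloud NAT so VMs without public IPs can reach package mirrors.
run gcloud compute routers create nat-router \
  --project="$PROJECT" --region="$REGION" --network=default
run gcloud compute routers nats create nat-config \
  --project="$PROJECT" --region="$REGION" --router=nat-router \
  --auto-allocate-nat-external-ips --nat-all-subnet-ip-ranges

# Allow SSH from the IAP TCP forwarding range.
run gcloud compute firewall-rules create allow-iap-ssh \
  --project="$PROJECT" --network=default --direction=INGRESS \
  --allow=tcp:22 --source-ranges=35.235.240.0/20
```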

Set environment variables for our desired location:

```shell
PROJECT=p20241007-gfs2-88
REGION=europe-west4
```

The Distributed Lock Manager (DLM) service and Corosync manage locking information between VMs in the cluster. Create a firewall rule to allow all communication between VMs when tagged with `gfs2cluster`:

```shell
gcloud compute firewall-rules create gfs2cluster \
  --project=$PROJECT \
  --direction=INGRESS --allow=all \
  --target-tags=gfs2cluster \
  --source-tags=gfs2cluster
```

Create three Ubuntu VMs, one in each zone of your selected region. These VMs will form the basis of the GFS2 cluster:

```shell
for ID in a b c; do
  gcloud compute instances create node-$ID \
    --machine-type=c3-highcpu-8 \
    --shielded-secure-boot \
    --network-interface=stack-type=IPV4_ONLY,subnet=default,no-address \
    --tags=gfs2cluster \
    --project=$PROJECT \
    --zone=$REGION-$ID \
    --create-disk=auto-delete=yes,boot=yes,mode=rw,provisioned-iops=3000,provisioned-throughput=140,size=10,image-project=ubuntu-os-cloud,image-family=ubuntu-2404-lts-amd64,type=projects/$PROJECT/zones/$REGION-$ID/diskTypes/hyperdisk-balanced
done
```

Create a HdB-HA-MW disk with replicas in the `b` and `c` zones. We will use these zones for our GFS2 VMs and the VM in zone `a` for distributed locking only.

```shell
gcloud compute disks create hdb-ha-mw \
  --project=$PROJECT \
  --type=hyperdisk-balanced-high-availability \
  --replica-zones=projects/$PROJECT/zones/$REGION-b,projects/$PROJECT/zones/$REGION-c \
  --size=250GB \
  --access-mode=READ_WRITE_MANY \
  --provisioned-iops=6000 --provisioned-throughput=280
```

Attach the disk to the `-b` and `-c` VMs.

```shell
gcloud compute instances attach-disk node-b --disk=hdb-ha-mw --device-name=hdb-ha-mw --zone=$REGION-b --disk-scope=regional --project=$PROJECT
gcloud compute instances attach-disk node-c --disk=hdb-ha-mw --device-name=hdb-ha-mw --zone=$REGION-c --disk-scope=regional --project=$PROJECT
```
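Before formatting anything it is worth confirming that the shared disk is visible inside the guest. The following is a minimal sketch meant to be run on `node-b` and `node-c` once you have a shell there; the helper name `check_shared_disk` is hypothetical, and the device path is the by-id name used later in this post.

```shell
#!/usr/bin/env bash
# Sketch: confirm the shared disk is visible before formatting it.
# DEVICE defaults to the by-id path used later in this post.
DEVICE=${DEVICE:-/dev/disk/by-id/google-hdb-ha-mw}

check_shared_disk() {
  local dev=$1
  if [ -b "$dev" ]; then
    # Resolve the symlink to show the underlying kernel device.
    echo "OK: $dev -> $(readlink -f "$dev")"
  else
    echo "MISSING: $dev is not a block device on $(hostname)" >&2
    return 1
  fi
}

check_shared_disk "$DEVICE" || echo "Attach the disk before continuing."
```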

Install and configure the VMs

Open a shell to each of the three VMs. Launching an SSH-in-browser tab from the Cloud Console is an easy way to do this.

On `node-a` install modules needed for distributed locking and cluster communications:

```shell
sudo apt-get update
sudo apt-get install corosync dlm-controld -y
```

On `node-b` and `node-c` install modules for GFS2, distributed locking and cluster communications:

```shell
sudo apt-get update
sudo apt-get install corosync dlm-controld gfs2-utils linux-modules-extra-$(uname -r) fio -y
```

Create a Corosync config file based on your VMs. Update the below replacing the `node` entries in the `nodelist` with the FQDN and ip address of your VMs. Use `hostname` and `ip addr show` on each VM to get the needed values. Once you have adapted the config run the command to apply it on all three VMs:

```shell
cat > corosync.conf <<EOF
totem {
  version: 2
  cluster_name: gcpcluster
  crypto_cipher: none
  crypto_hash: none
}
nodelist {
  node {
    name: node-a.europe-west4-a.c.p20241007-gfs2-88.internal
    nodeid: 1
    ring0_addr: 10.164.0.7
  }
  node {
    name: node-b.europe-west4-b.c.p20241007-gfs2-88.internal
    nodeid: 2
    ring0_addr: 10.164.0.8
  }
  node {
    name: node-c.europe-west4-c.c.p20241007-gfs2-88.internal
    nodeid: 3
    ring0_addr: 10.164.0.9
  }
}
quorum {
  provider: corosync_votequorum
}
system {
  allow_knet_handle_fallback: yes
}
logging {
  fileline: off
  to_stderr: yes
  to_logfile: yes
  logfile: /var/log/corosync/corosync.log
  to_syslog: yes
  debug: off
  logger_subsys {
    subsys: QUORUM
    debug: off
  }
}
EOF
sudo cp corosync.conf /etc/corosync/corosync.conf
```

On each VM restart `corosync` and verify all three nodes are members. Do not continue if the output does not show all three nodes as members:

```shell
sudo systemctl restart corosync
sudo corosync-quorumtool
```

```text
Quorum information
------------------
Date:             Fri Oct 4 16:48:01 2024
Quorum provider:  corosync_votequorum
Nodes:            3
Node ID:          1
Ring ID:          1.12
Quorate:          Yes

Votequorum information
----------------------
Expected votes:   3
Highest expected: 3
Total votes:      3
Quorum:           2
Flags:            Quorate

Membership information
----------------------
    Nodeid      Votes Name
         1          1 node-a.europe-west4-a.c.p20241007-gfs2-88.internal (local)
         2          1 node-b.europe-west4-b.c.p20241007-gfs2-88.internal
         3          1 node-c.europe-west4-c.c.p20241007-gfs2-88.internal
```

On `node-b` create a GFS2 volume. The `-t` argument includes the `cluster_name` we configured in the `corosync.conf` file, and `shared` is a unique filesystem name. We set `-j 2` because we are sharing the disk with two VMs. Run this command and answer yes when prompted:

```shell
sudo mkfs.gfs2 -p lock_dlm -t gcpcluster:shared -j 2 /dev/disk/by-id/google-hdb-ha-mw
```

On both `node-b` and `node-c` create a mount point and systemd service to mount it on boot because a standard entry in `/etc/fstab` is insufficient due to dependencies on DLM.

```shell
sudo mkdir /shared
cat > gfs2-mount.service <<EOF
[Unit]
Description=Mount GFS2 Filesystem
After=network-online.target
After=dlm.service

[Service]
Type=oneshot
RemainAfterExit=yes
ExecStart=/bin/mount -t gfs2 -o rw,noatime,nodiratime,rgrplvb /dev/disk/by-id/google-hdb-ha-mw /shared
ExecStop=/bin/umount /shared

[Install]
WantedBy=multi-user.target
EOF
sudo cp gfs2-mount.service /etc/systemd/system/gfs2-mount.service
sudo systemctl enable --now gfs2-mount.service
```

To ensure all required services start correctly on boot, stop and start all VMs from the Cloud Console or from the CLI using:

```shell
for ID in a b c; do
  gcloud compute instances stop node-$ID \
    --project=$PROJECT \
    --zone=$REGION-$ID
done
for ID in a b c; do
  gcloud compute instances start node-$ID \
    --project=$PROJECT \
    --zone=$REGION-$ID \
    --async
done
```
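After the VMs come back it can be handy to confirm the services are up before moving on. The helper below is a rough sketch, not part of the original procedure: `check_units` is a hypothetical name, and the unit names are the ones used in the steps above (`corosync`, `dlm`, and the `gfs2-mount.service` created earlier).

```shell
#!/usr/bin/env bash
# Sketch: report the state of the services this walkthrough relies on.
# Unit names are taken from the steps above; adjust if yours differ.
check_units() {
  local unit state rc=0
  for unit in "$@"; do
    # is-active prints the state and exits non-zero when not active.
    state=$(systemctl is-active "$unit" 2>/dev/null || true)
    echo "$unit: ${state:-unknown}"
    [ "$state" = "active" ] || rc=1
  done
  return $rc
}

# node-a only runs corosync and dlm; node-b and node-c also mount /shared.
# Example on node-b or node-c:
#   check_units corosync dlm gfs2-mount.service && findmnt /shared
```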

Mount and use the shared filesystem

Re-open shells to each of the three VMs. From

node-brelax filesystem permissions to ease access and then start a simple workload:sudo chmod 777 /shared cd /shared watch -n 1 "echo -n $(hostname) ' ' >> date.out; date >> date.out"On

node-cread the file that is being appended to bynode-b:cd /shared tail -f date.out node-b.europe-west4-b.c.p20241007-gfs2-88.internal Mon Oct 7 16:20:49 UTC 2024 node-b.europe-west4-b.c.p20241007-gfs2-88.internal Mon Oct 7 16:20:50 UTC 2024 node-b.europe-west4-b.c.p20241007-gfs2-88.internal Mon Oct 7 16:20:51 UTC 2024 node-b.europe-west4-b.c.p20241007-gfs2-88.internal Mon Oct 7 16:20:52 UTC 2024 node-b.europe-west4-b.c.p20241007-gfs2-88.internal Mon Oct 7 16:20:53 UTC 2024Let’s test this shared filesystem by running the same workload, on the same file, from

node-c. This will demonstrate how GFS2’s locking mechanisms ensure data integrity:watch -n 1 "echo -n $(hostname) ' ' >> date.out; date >> date.out"Open another shell to

node-band follow the file to observe writes from both hosts are intermixed:tail -f /shared/date.out node-c.europe-west4-c.c.p20241007-gfs2-88.internal Mon Oct 7 16:22:49 UTC 2024 node-b.europe-west4-b.c.p20241007-gfs2-88.internal Mon Oct 7 16:22:49 UTC 2024 node-c.europe-west4-c.c.p20241007-gfs2-88.internal Mon Oct 7 16:22:50 UTC 2024 node-b.europe-west4-b.c.p20241007-gfs2-88.internal Mon Oct 7 16:22:50 UTC 2024 node-c.europe-west4-c.c.p20241007-gfs2-88.internal Mon Oct 7 16:22:51 UTC 2024 node-b.europe-west4-b.c.p20241007-gfs2-88.internal Mon Oct 7 16:22:51 UTC 2024Now it’s time to test the performance. Stop the

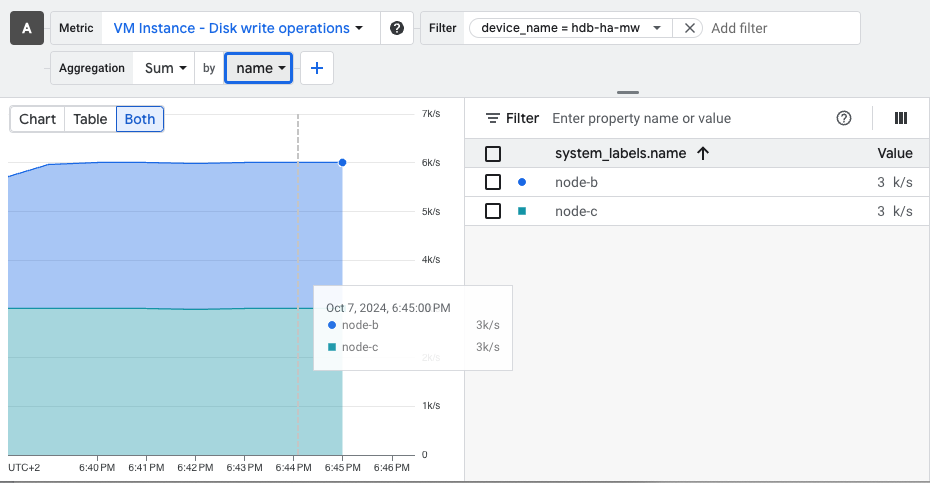

datecommands and use thefiocommand below to start a write IOP workload from each VM concurrently. Observe each VM achieves half the disk provisioned IOPs, or 3K IOPs in our case, with similar latencies:fio --name=`hostname -s` --size=1G \ --time_based --runtime=5m --ramp_time=2s --ioengine=libaio --direct=1 \ --verify=0 --bs=4K --iodepth=2 --rw=randwrite --group_reporting=1 node-b: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=2 fio-3.36 Starting 1 process Jobs: 1 (f=1): [w(1)][100.0%][w=11.7MiB/s][w=3001 IOPS][eta 00m:00s] node-b: (groupid=0, jobs=1): err= 0: pid=2501: Mon Oct 7 16:42:21 2024 write: IOPS=3016, BW=11.8MiB/s (12.4MB/s)(3535MiB/300001msec); 0 zone resets slat (nsec): min=1919, max=150331, avg=4637.11, stdev=2043.55 clat (usec): min=404, max=29166, avg=657.96, stdev=192.52 lat (usec): min=407, max=29171, avg=662.60, stdev=192.85 clat percentiles (usec): | 1.00th=[ 474], 5.00th=[ 506], 10.00th=[ 529], 20.00th=[ 553], | 30.00th=[ 570], 40.00th=[ 586], 50.00th=[ 611], 60.00th=[ 627], | 70.00th=[ 652], 80.00th=[ 685], 90.00th=[ 955], 95.00th=[ 1123], | 99.00th=[ 1270], 99.50th=[ 1319], 99.90th=[ 1483], 99.95th=[ 1713], | 99.99th=[ 2671] bw ( KiB/s): min=11704, max=14024, per=100.00%, avg=12068.29, stdev=300.79, samples=600 iops : min= 2926, max= 3506, avg=3017.06, stdev=75.19, samples=600 lat (usec) : 500=4.09%, 750=82.75%, 1000=3.81% lat (msec) : 2=9.32%, 4=0.03%, 10=0.01%, 20=0.01%, 50=0.01% cpu : usr=0.32%, sys=1.57%, ctx=596311, majf=0, minf=37 IO depths : 1=0.0%, 2=100.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0% submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0% complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0% issued rwts: total=0,904999,0,0 short=0,0,0,0 dropped=0,0,0,0 latency : target=0, window=0, percentile=100.00%, depth=2 Run status group 0 (all jobs): WRITE: bw=11.8MiB/s (12.4MB/s), 11.8MiB/s-11.8MiB/s (12.4MB/s-12.4MB/s), io=3535MiB 
(3707MB), run=300001-300001msec Disk stats (read/write): nvme0n2: ios=0/911244, sectors=0/7289952, merge=0/0, ticks=0/594190, in_queue=594190, util=98.94%Use Cloud Monitoring to verify the per attachment IOPs

compute.googleapis.com/instance/disk/write_ops_countmetric, filtered ondevice_name=hdb-ha-mw, aggregated by system metadata labelname:
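As a sanity check on the numbers, the expected per-VM figures can be worked out from the provisioned values. This sketch assumes the provisioned IOPS split evenly between the two writers, which is what I observed here rather than a documented guarantee; the variable names are mine.

```shell
#!/usr/bin/env bash
# Sketch: relate provisioned IOPS to what each VM and fio should report.
PROVISIONED_IOPS=6000   # from the disk creation step
WRITERS=2               # node-b and node-c (even split is an assumption)
BLOCK_SIZE_KIB=4        # fio --bs=4K

per_vm_iops=$(( PROVISIONED_IOPS / WRITERS ))
# Bandwidth in hundredths of MiB/s so integer maths keeps two decimals.
bw_hundredths=$(( per_vm_iops * BLOCK_SIZE_KIB * 100 / 1024 ))

printf 'per-VM IOPS: %d\n' "$per_vm_iops"
printf 'expected bandwidth: %d.%02d MiB/s\n' \
  $(( bw_hundredths / 100 )) $(( bw_hundredths % 100 ))
```

This prints 3000 IOPS and 11.71 MiB/s per VM, in line with the roughly 3016 IOPS and 11.8MiB/s that fio reported on `node-b`.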

At this point you are free to try other workloads, reboot to simulate failure modes, and in general experiment with the ins and outs of a shared-disk filesystem. When you are done, delete the VMs and the shared disk to clean up.
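For the cleanup, a sketch along the following lines should cover the resources created in this post. It reuses the names from the steps above; the placeholder project default and the dry-run `run` wrapper are my additions. Commands are only printed unless `APPLY=1` is set, so you can review them first.

```shell
#!/usr/bin/env bash
# Sketch only: removes the resources created in this walkthrough.
PROJECT=${PROJECT:-my-project}     # placeholder, replace with your project
REGION=${REGION:-europe-west4}

# Print each command by default; set APPLY=1 to actually run it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

# Boot disks were created with auto-delete=yes, so they go with the VMs.
for ID in a b c; do
  run gcloud compute instances delete "node-$ID" \
    --project="$PROJECT" --zone="$REGION-$ID" --quiet
done

# The shared disk is regional, so it is addressed by region rather than zone.
run gcloud compute disks delete hdb-ha-mw \
  --project="$PROJECT" --region="$REGION" --quiet

run gcloud compute firewall-rules delete gfs2cluster \
  --project="$PROJECT" --quiet
```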

Conclusion

The ability to replicate data across zones, coupled with the multi-writer access mode, makes Hyperdisk an ideal solution for applications demanding both high availability and performance. This blog post demonstrated a basic GFS2 implementation, but the possibilities extend far beyond. Hyperdisk Balanced HA multi-writer provides a foundation for building resilient and scalable applications on Google Cloud.

As always, comments are welcome!